Topic 2 - ROS and point clouds

Goals of the Class

In this class, you will learn the fundamentals and capabilities of ROS2 (Robot Operating System 2) by performing 2D SLAM (Simultaneous Localization and Mapping) and localizing with AMCL (Adaptive Monte Carlo Localization) on a simulated Turtlebot3 robot. You will also map a real 3D environment using data recorded with a 3D LIDAR sensor. You will:

- Generate a 2D map of the robot’s environment using LIDAR data.

- Enable the robot to navigate autonomously within this known map.

- Explore how tweaking system parameters affects the performance of SLAM and AMCL algorithms.

- Extend your skills to 3D localization by creating a 3D map using a laser scanner and the lidarslam package.

Before You Begin

To complete the exercises, you’ll need two Docker images: arm/lab03 (for 2D SLAM and navigation) and arm/lab06 (for 3D SLAM). These images contain pre-configured ROS2 environments with all required tools and dependencies.

Check and Download Docker Images

Check whether the images are already on your computer:

```bash
docker images
```

Look for arm/lab03 and arm/lab06 in the output.

If they’re missing, download them:

For arm/lab03:

```bash
wget --content-disposition --no-check-certificate https://chmura.put.poznan.pl/s/pszNFePmGXxu1XX/download
```

For arm/lab06:

```bash
wget --content-disposition --no-check-certificate https://chmura.put.poznan.pl/s/B1td9ifRL1S0js9/download
```

Load the downloaded .tar.gz files into Docker:

```bash
docker load < path/to/file.tar.gz
```

ROS2 Introduction / Recap

The Robot Operating System (ROS) is a set of development libraries and tools for building robotic applications. ROS offers open-source tools ranging from sensor and robot drivers to advanced algorithms. Designed primarily for research projects, ROS1 was poorly suited to industrial applications: it had weaknesses in message access security and was not adapted to the requirements of real-time systems. The second generation, ROS2, was redesigned to meet these challenges and significantly extends the functionality of ROS1. The differences between the versions are described in the article. The first ROS2 distribution, Ardent Apalone, was released in late 2017. We will use Humble Hawksbill, one of the latest stable long-term support (LTS) releases.

Key ROS2 Concepts

Here’s a breakdown of essential terms:

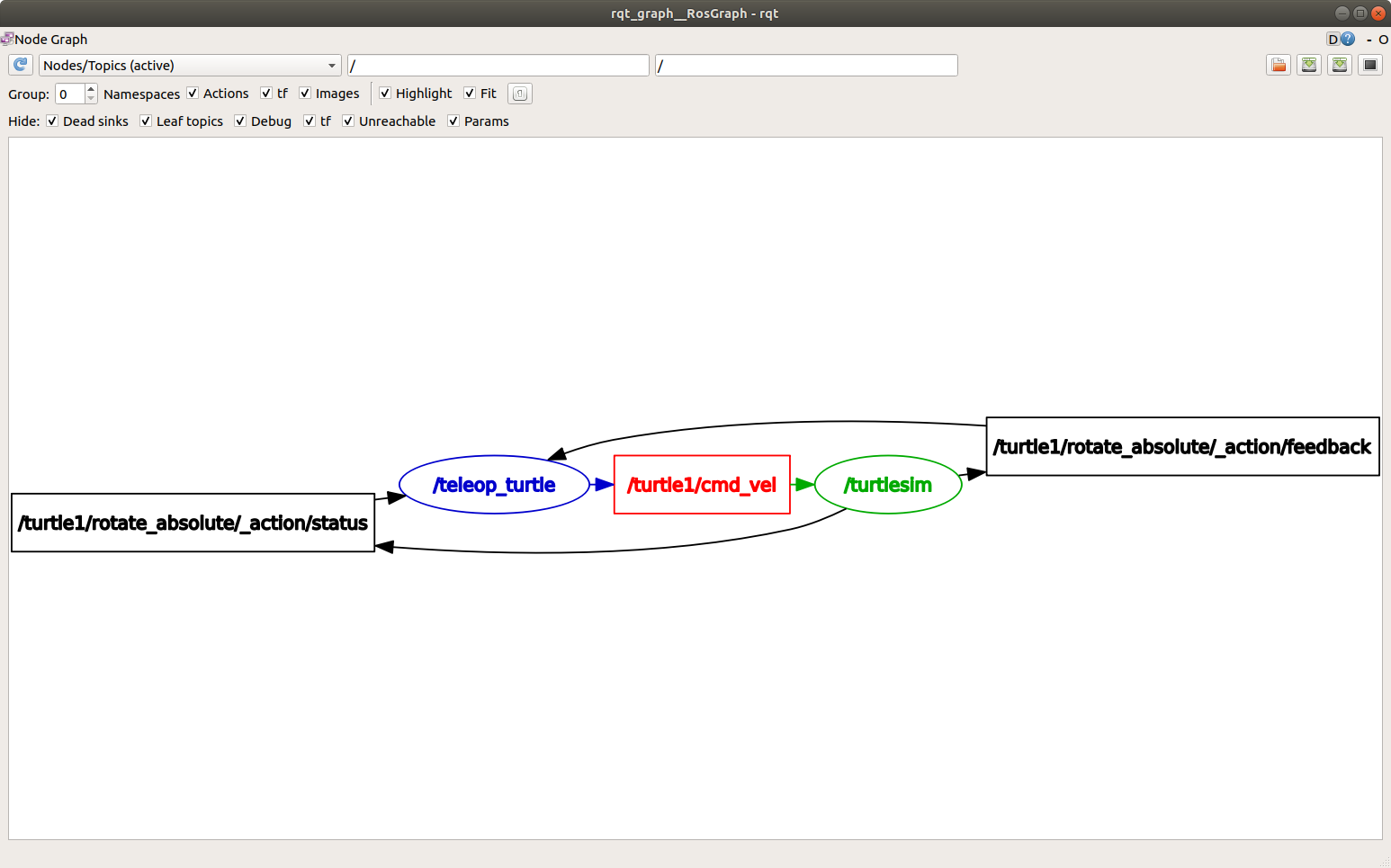

- Nodes: Small, independent programs that perform specific tasks (e.g., reading a sensor or moving a motor). Nodes communicate within a ROS graph, a network showing how they exchange data.

- Topics: Channels where nodes send and receive data using a publish/subscribe model. For example, a node might publish a robot’s position to the /robot/position topic, while another subscribes to display it.

- Messages: Structured data sent over topics, defined in .msg files (e.g., geometry_msgs/msg/Twist for velocity commands).

- Services and Actions: Services let a node send a request and wait for a response; actions trigger longer-running tasks that report feedback along the way and can be cancelled. Both are two-way, unlike the one-way communication of topics.

- Discovery: The automatic process where nodes find and connect to each other on the network.
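To make the publish/subscribe model concrete, here is a toy, in-process sketch in Python. It is not rclpy: ToyGraph and the Position message are hypothetical stand-ins, and no network, discovery or DDS is involved. It only shows the key property of topics: a publisher addresses a topic name, not a particular receiver.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, List


@dataclass
class Position:
    """Hypothetical message type for the /robot/position example."""
    x: float
    y: float


class ToyGraph:
    """In-process stand-in for the ROS graph: maps topic names to subscriber callbacks."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> int:
        """Deliver msg to every subscriber of topic; return how many received it."""
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(msg)
        return len(callbacks)


if __name__ == "__main__":
    graph = ToyGraph()
    graph.subscribe("/robot/position", lambda m: print(f"display: ({m.x}, {m.y})"))
    graph.publish("/robot/position", Position(1.0, 2.0))  # prints "display: (1.0, 2.0)"
```

In rclpy the same roles are played by Node.create_publisher and Node.create_subscription, with DDS handling delivery between processes and discovery.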

ROS2 Environment (Workspace)

A ROS environment is the place where packages are stored, e.g. for a particular robot. There can be many different environments on one computer (e.g. ros2_robotA_ws, ros2_robotB_ws). A typical workspace looks like this:

```
ros2_ws
├── build/    # Temporary files for building packages
├── install/  # Compiled packages ready to use
├── log/      # Build process logs
└── src/      # Your source code and packages
```

Building the Workspace

Use the colcon tool to build the workspace:

```bash
cd ros2_ws
colcon build
```

To build a specific package:

```bash
colcon build --packages-select package_name
```

Activating the Workspace

After building, “source” the environment to access your packages in the terminal:

```bash
source install/setup.bash
```

Note: Run this command in every new terminal session to work with your workspace.

Packages

A package is a modular unit containing nodes, libraries, and configuration files. For example, a Python package might look like:

```
my_package/
├── my_package/   # Nodes and scripts
├── setup.py      # Installation instructions
├── setup.cfg     # Executable details
├── package.xml   # Package info and dependencies
└── test/         # Automated tests
```

Packages are organized and built with ament, the ROS2 successor to the catkin build system known from ROS1.

Create a package with:

- C++:

```bash
ros2 pkg create --build-type ament_cmake package_name
```

- Python:

```bash
ros2 pkg create --build-type ament_python package_name
```

Add dependencies using --dependencies, e.g., --dependencies rclpy sensor_msgs.

Package Dependencies Management

rosdep is a tool that significantly simplifies a ROS developer's work. It automatically installs the dependencies (packages, libraries) of all packages in a given workspace. Because the dependencies are declared in each package's package.xml file, there is no need to install them manually.

To use rosdep, run the following commands in the root directory of the ROS2 workspace (e.g. ~/ros2_ws):

```bash
sudo rosdep init
rosdep update
rosdep install --from-paths src -y --ignore-src --rosdistro humble
```

The first command initializes rosdep (this is needed only once per system), and the second updates the local indexes from the rosdistro package database. The last command installs the dependencies: --from-paths src tells rosdep to look for package.xml files inside the src directory, -y automatically answers yes to installation prompts, and --ignore-src skips dependencies that are themselves packages in src (since we build those ourselves).
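rosdep works from the dependency tags declared in each package.xml. The plain-Python sketch below (standard library only) illustrates what --from-paths src --ignore-src amounts to: gather every declared dependency, then drop the names that are packages in src themselves. It is an illustration, not rosdep's code; the real tool also resolves each remaining key to a system package (for example an apt package) using the rosdep database in the rosdistro repository. The package names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Set

# Main dependency tags of the package.xml format (REP 140 / REP 149).
DEP_TAGS = ("depend", "build_depend", "build_export_depend",
            "exec_depend", "test_depend", "buildtool_depend")


def read_dependencies(package_xml: str) -> Set[str]:
    """Names of all dependencies declared in one package.xml document."""
    root = ET.fromstring(package_xml)
    return {el.text.strip() for tag in DEP_TAGS for el in root.iter(tag) if el.text}


def keys_to_install(manifests: Dict[str, str]) -> Set[str]:
    """Mimic --from-paths src --ignore-src for manifests found under src/.

    manifests maps a path (e.g. "src/my_driver/package.xml") to its XML text.
    Dependencies that are themselves packages in src/ are dropped, because
    colcon builds them from source instead.
    """
    local_packages = {ET.fromstring(xml).findtext("name", "").strip()
                      for xml in manifests.values()}
    declared: Set[str] = set()
    for xml in manifests.values():
        declared |= read_dependencies(xml)
    return declared - local_packages


if __name__ == "__main__":
    src = {
        "src/my_driver/package.xml": """<package format="3">
  <name>my_driver</name>
  <depend>rclpy</depend>
  <depend>sensor_msgs</depend>
  <depend>my_msgs</depend>
</package>""",
        "src/my_msgs/package.xml": """<package format="3">
  <name>my_msgs</name>
  <buildtool_depend>ament_cmake</buildtool_depend>
</package>""",
    }
    print(sorted(keys_to_install(src)))  # ['ament_cmake', 'rclpy', 'sensor_msgs']
```

Here my_msgs is a dependency of my_driver, but it is skipped because it lives in src and will be built by colcon.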

Many ready-made packages are listed in the ROS repository, rosdistro; anyone can add their own package to it via a pull request.

Node Operations

Starting nodes is done via the command:

```bash
ros2 run package_name node_name
```

To get the current list of nodes:

```bash
ros2 node list
```

To obtain node information:

```bash
ros2 node info <node_name>
```

Nodes can be grouped so that they are started together; launch files are used for this. An existing launch file is invoked with:

```bash
ros2 launch package_name launch_name
```

In ROS2, launch files can be written in XML (.xml), Python (.py) or YAML (.yaml). Python is recommended because of its flexibility. For more information, see the launch file reference.

Topic Operations

Viewing the current list of topics is done using the command:

```bash
ros2 topic list
```

List of topics with their associated message types:

```bash
ros2 topic list -t
```

Reading messages from a topic:

```bash
ros2 topic echo topic_name
```

A single topic can have multiple publishers as well as subscribers. Information about them, as well as the type of message being exchanged, can be checked with the command:

```bash
ros2 topic info topic_name
```

It is also possible to publish messages on a topic from the terminal:

```bash
ros2 topic pub topic_name message_type 'message_data'
```

Example:

```bash
ros2 topic pub --once /turtle1/cmd_vel geometry_msgs/msg/Twist "{linear: {x: 2.0, y: 0.0, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 1.8}}"
```

The --once argument publishes the message a single time and then exits; without it, the message is republished (by default at 1 Hz) until you press Ctrl+C.
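For a planar robot only linear.x (forward speed v) and angular.z (yaw rate ω) of a Twist matter. Held constant, they drive the robot along a circle of radius v/ω, so the example command above (v = 2.0, ω = 1.8) traces an arc of radius about 1.11 m. A minimal sketch of this unicycle model, assuming the command stays constant for dt seconds (how long a real node keeps applying a single message depends on that node):

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # heading in radians


def integrate_twist(pose: Pose2D, v: float, omega: float, dt: float) -> Pose2D:
    """Advance a planar pose under constant forward speed v and yaw rate omega.

    Uses the exact arc solution of the unicycle model and falls back to a
    straight line when omega is (almost) zero.
    """
    if abs(omega) < 1e-9:
        return Pose2D(pose.x + v * dt * math.cos(pose.theta),
                      pose.y + v * dt * math.sin(pose.theta),
                      pose.theta)
    theta_new = pose.theta + omega * dt
    r = v / omega  # turning radius
    return Pose2D(pose.x + r * (math.sin(theta_new) - math.sin(pose.theta)),
                  pose.y - r * (math.cos(theta_new) - math.cos(pose.theta)),
                  theta_new)


if __name__ == "__main__":
    # The example command (v = 2.0, omega = 1.8), assumed to be held for 1 s.
    pose = integrate_twist(Pose2D(), v=2.0, omega=1.8, dt=1.0)
    print(f"x={pose.x:.3f} y={pose.y:.3f} theta={pose.theta:.3f}")
```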

To read the frequency with which data is published on a topic:

```bash
ros2 topic hz topic_name
```

Useful Tools

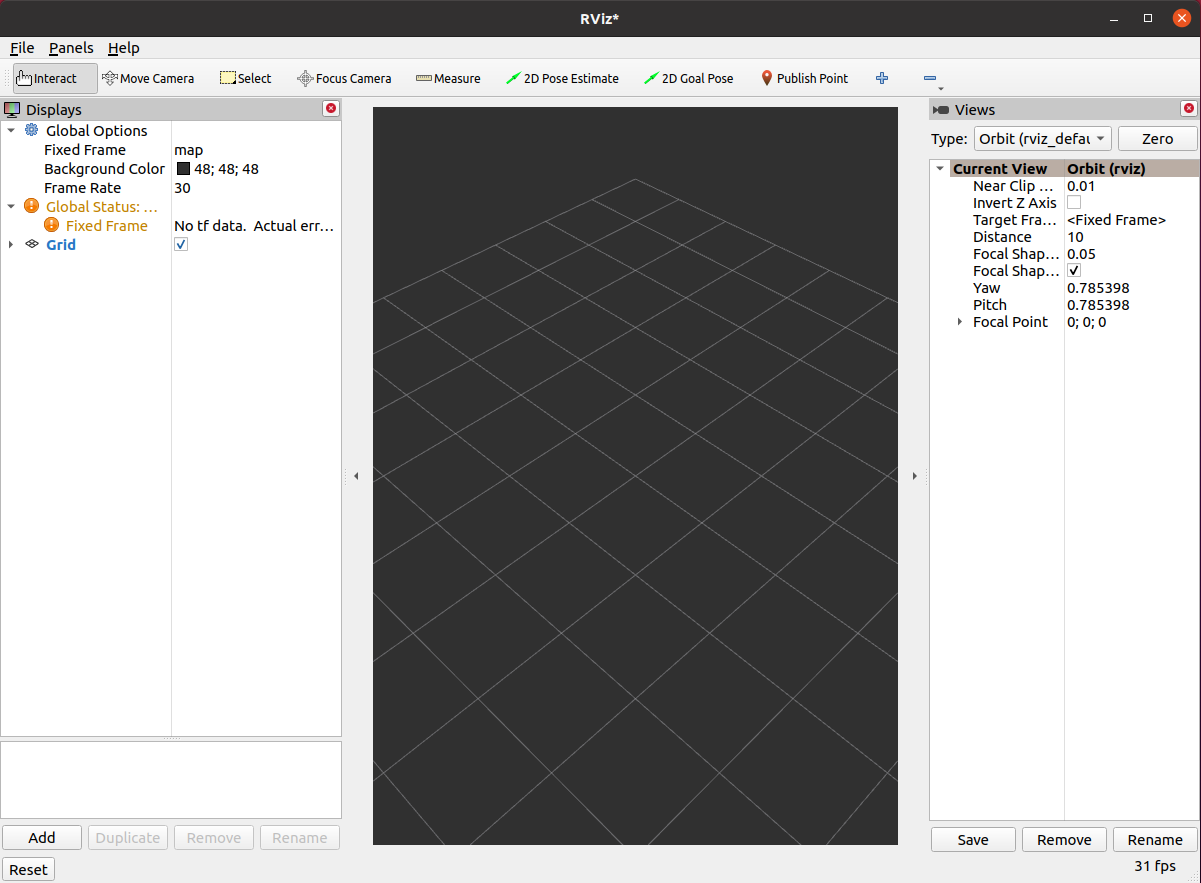

- RViz: A 3D visualization tool to display sensor data and robot models:

rviz2

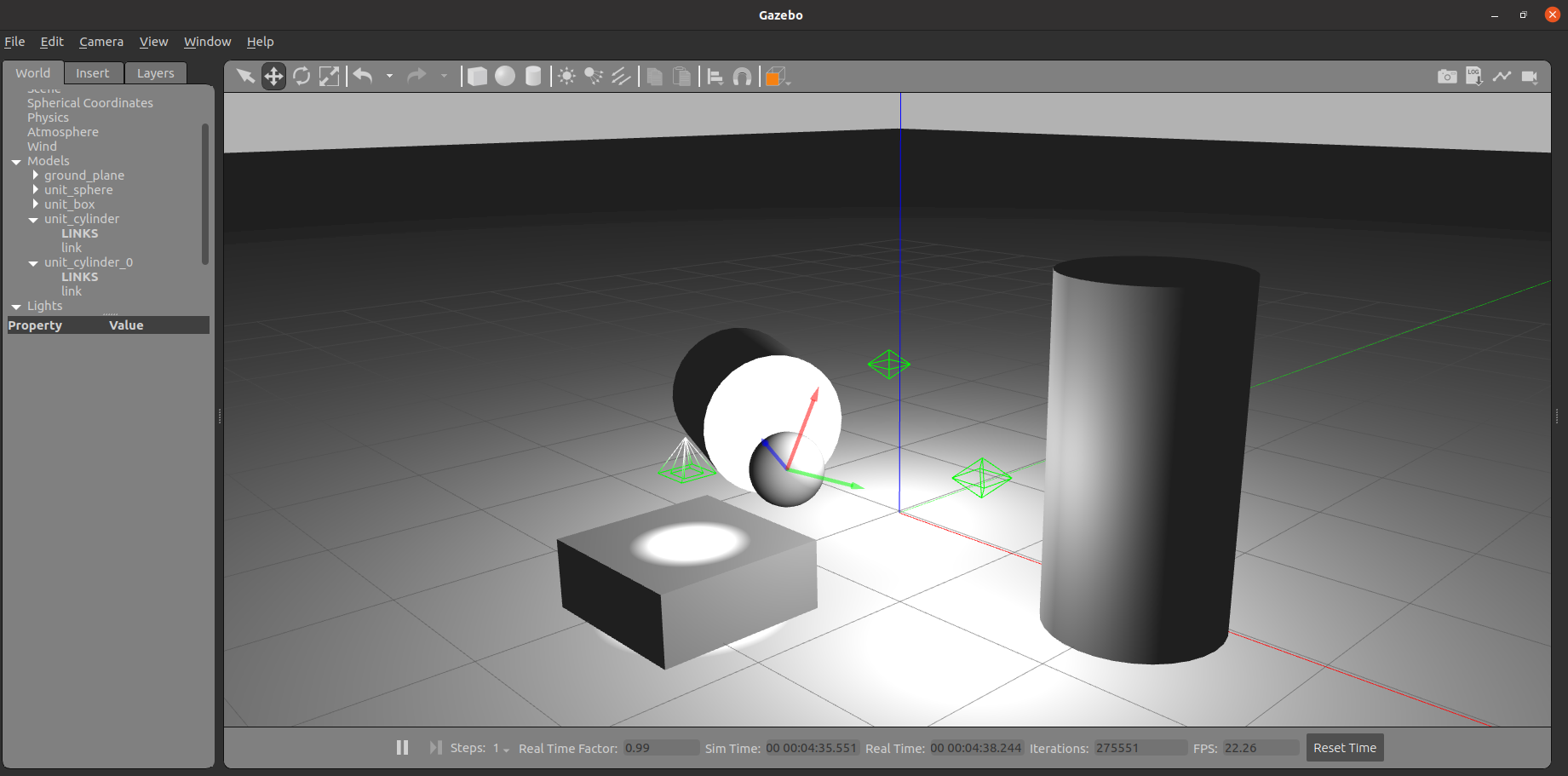

- Gazebo: A simulator for building a virtual world for your robot and simulating its interaction with objects:

gazebo

- rqt_graph: A tool for visualizing the ROS graph:

rqt_graph

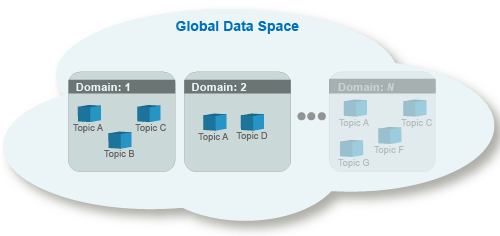

Multi-Computer Setup

The standard used by ROS2 for communication is DDS (Data Distribution Service). In DDS there is the concept of “domains”. These allow the logical separation of connections within a network.

Nodes in the same domain can freely detect each other and send messages to each other, whereas nodes in different domains cannot. All ROS2 nodes use domain ID 0 by default. To avoid interference between different groups of ROS2 computers on the same network, a different domain ID should be set for each group (by setting ROS_DOMAIN_ID environment variable).

In the laboratory, each computer must use its own unique domain ID. To set it, read the number from the sticker on the monitor and substitute it for NR_COMPUTER in the following commands. If there is no sticker on your computer, select a number between 0-101 or 215-232.

```bash
grep -q ^'export ROS_DOMAIN_ID=' ~/.bashrc || echo 'export ROS_DOMAIN_ID=NR_COMPUTER' >> ~/.bashrc
source ~/.bashrc
```

The first command appends the export line to ~/.bashrc only if it is not already there, so the domain ID is set in every new terminal window; source applies it to the current one. This prevents nodes from being visible between different computers on the same network.
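Why these particular ranges? Under the default port mapping of the DDSI-RTPS specification, which DDS implementations follow unless reconfigured, each domain ID selects its own block of UDP ports. The sketch below computes them for participant 0 and checks them against the default Linux ephemeral port range (32768-60999), which the operating system may hand out to other programs. It reproduces the 0-101 and 215-232 ranges; note that each additional ROS2 process on the same machine gets a higher participant ID and thus higher unicast ports, so the margin shrinks near the edges of these ranges.

```python
from typing import Dict

# Default port-mapping parameters of the DDSI-RTPS specification.
PB, DG, PG = 7400, 250, 2       # port base, domain ID gain, participant ID gain
D0, D1, D2, D3 = 0, 10, 1, 11   # offsets: discovery mcast, discovery ucast, user mcast, user ucast

LINUX_EPHEMERAL = range(32768, 61000)  # Linux default ip_local_port_range 32768-60999


def rtps_ports(domain_id: int, participant_id: int = 0) -> Dict[str, int]:
    """UDP ports used by one DDS participant under the default RTPS port mapping."""
    base = PB + DG * domain_id
    return {
        "discovery_multicast": base + D0,
        "discovery_unicast": base + D1 + PG * participant_id,
        "user_multicast": base + D2,
        "user_unicast": base + D3 + PG * participant_id,
    }


def is_safe_domain(domain_id: int, participant_id: int = 0) -> bool:
    """True if all ports are valid UDP ports outside the default ephemeral range."""
    ports = rtps_ports(domain_id, participant_id).values()
    return all(p <= 65535 and p not in LINUX_EPHEMERAL for p in ports)


if __name__ == "__main__":
    print(is_safe_domain(101), is_safe_domain(102), is_safe_domain(215), is_safe_domain(232))
    # True False True True
```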

ROS2 Bag: Recording and Playback

ROS2 Bag lets you record and replay topic data, useful for testing without a live robot:

- Record:

```bash
ros2 bag record /topic1 /topic2
```

- Play (-r 0.5 replays at half speed):

```bash
ros2 bag play bag_name -r 0.5
```

- Info:

```bash
ros2 bag info bag_name
```

Part 1: 2D Point Cloud SLAM and Navigation in a Simple Environment

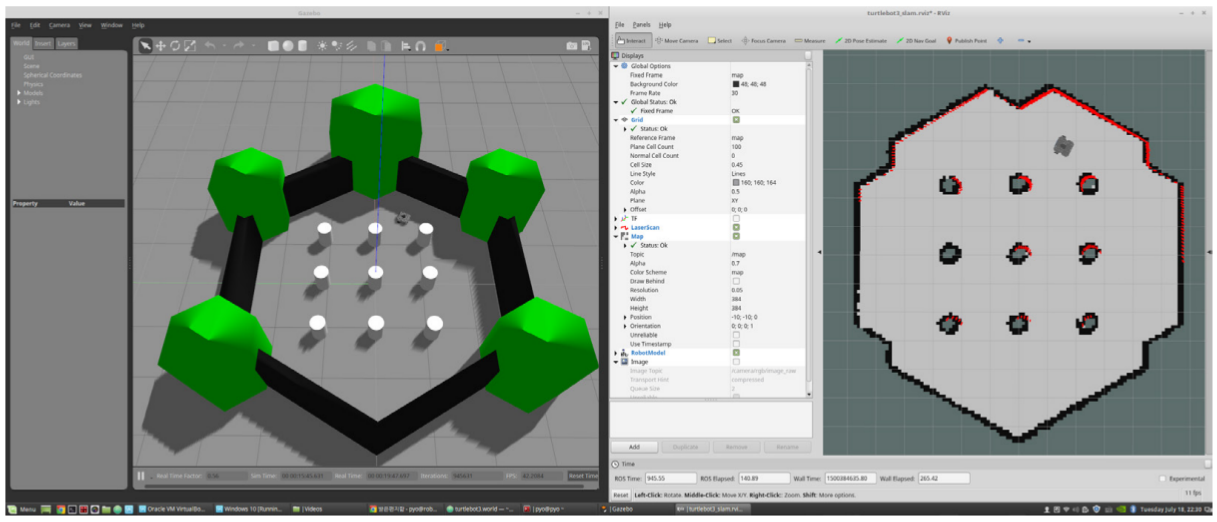

In this part, you’ll use a simulated Turtlebot3 to build a 2D map with SLAM and navigate it with AMCL.

Key Concepts

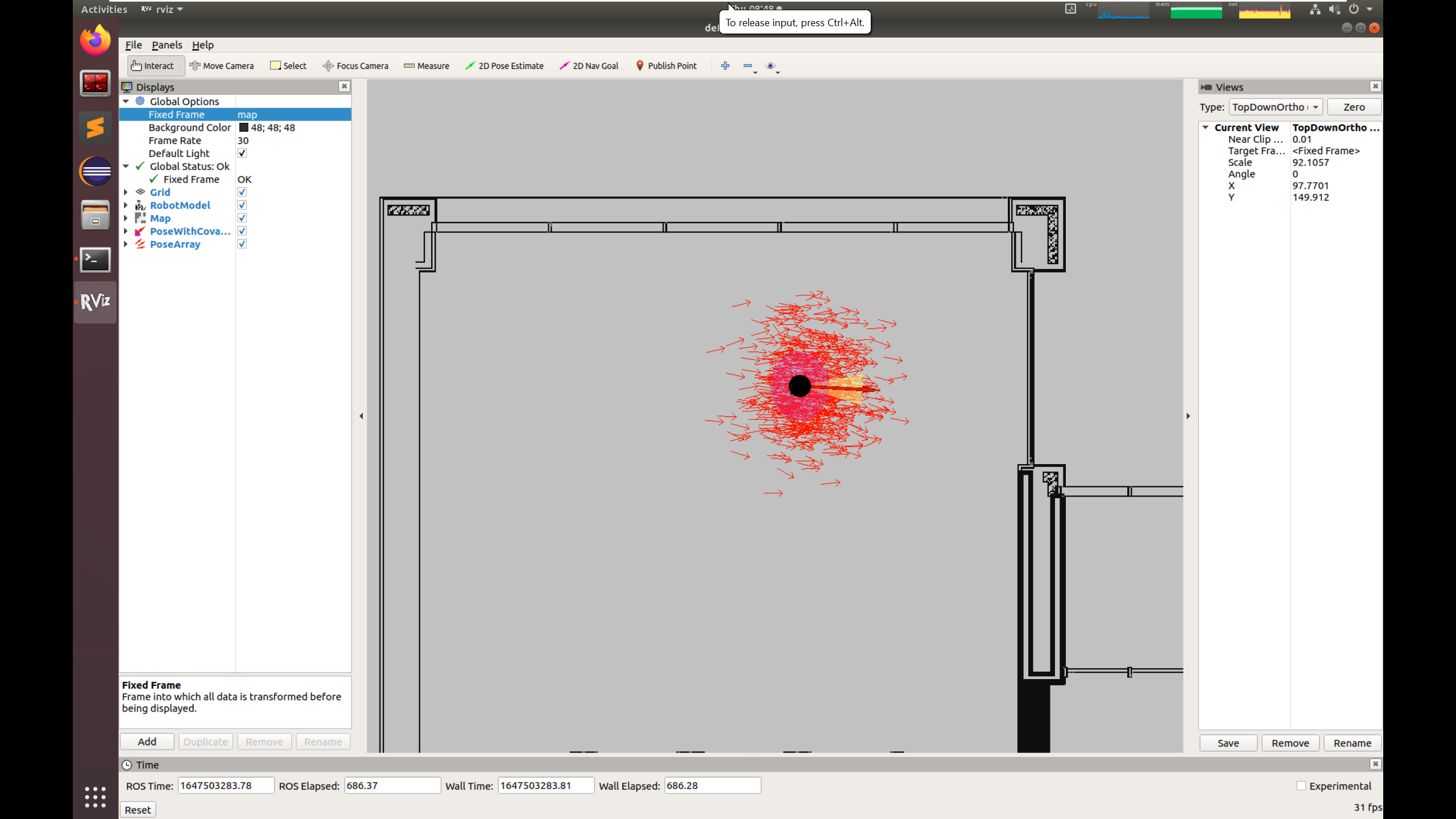

- SLAM (Simultaneous Localization and Mapping): A technique where a robot builds a map of an unknown area while tracking its position. Here, we will use Cartographer, a Google-developed SLAM system that creates maps from LIDAR data using graph optimization.

- AMCL (Adaptive Monte Carlo Localization): A method to locate a robot on a known map using a particle filter. AMCL represents the robot’s possible positions as a set of particles, where each particle is a hypothesis of the robot’s location with an associated weight reflecting its likelihood. The algorithm updates these particles based on the robot’s motion (using a motion model) and sensor data (using a sensor model), then resamples them to focus on the most probable positions.

- Point Cloud: A collection of 3D points (x, y, z) from sensors like LIDAR, representing the environment’s shape.
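The predict/weight/resample cycle described for AMCL can be illustrated with a toy 1D particle filter. This is a sketch under stated assumptions, not nav2_amcl's code: a hypothetical corridor with a single wall, a range sensor with Gaussian noise, Gaussian motion noise, and systematic (low-variance) resampling. The real AMCL works on 2D poses, evaluates laser scans against the occupancy map, and adapts the number of particles (KLD sampling, bounded by min_particles and max_particles).

```python
import math
import random
from typing import List, Tuple

WORLD_LENGTH = 10.0  # hypothetical 1D corridor [0, 10] m with a wall at x = 10 m


def motion_update(particles: List[float], u: float, sigma: float,
                  rng: random.Random) -> List[float]:
    """Motion model: move every hypothesis by the odometry step u plus Gaussian noise."""
    return [p + u + rng.gauss(0.0, sigma) for p in particles]


def sensor_weights(particles: List[float], z: float, sigma: float) -> List[float]:
    """Sensor model: normalized Gaussian likelihood of measuring range z to the wall."""
    w = [math.exp(-0.5 * ((WORLD_LENGTH - p - z) / sigma) ** 2) for p in particles]
    total = sum(w)
    if total == 0.0:  # every hypothesis is implausible: fall back to uniform weights
        return [1.0 / len(particles)] * len(particles)
    return [wi / total for wi in w]


def low_variance_resample(particles: List[float], weights: List[float],
                          rng: random.Random) -> List[float]:
    """Systematic resampling: draw particles in proportion to their weights."""
    n = len(particles)
    step = 1.0 / n
    u = rng.uniform(0.0, step)
    cumulative, i, out = weights[0], 0, []
    for _ in range(n):
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        out.append(particles[i])
        u += step
    return out


def run_filter(start: float = 2.0, steps: int = 8, n: int = 500,
               seed: int = 0) -> Tuple[float, float]:
    """Return (true final position, mean of the particle cloud)."""
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, WORLD_LENGTH) for _ in range(n)]  # unknown start
    x = start
    for _ in range(steps):
        u = 0.5                                     # odometry step [m]
        x += u                                      # true motion (noise-free here)
        particles = motion_update(particles, u, 0.05, rng)
        z = WORLD_LENGTH - x + rng.gauss(0.0, 0.1)  # noisy range to the wall
        weights = sensor_weights(particles, z, 0.1)
        particles = low_variance_resample(particles, weights, rng)
    return x, sum(particles) / n


if __name__ == "__main__":
    true_x, estimate = run_filter()
    print(f"true x = {true_x:.2f} m, estimate = {estimate:.2f} m")
```

Starting from particles spread over the whole corridor (global uncertainty), the cloud collapses around the true position after the first measurement and then follows the robot, which is the behavior you will see in RViz after setting the initial pose.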

Environment Preparation

- Load the Docker Image: Use arm/lab03 (see Before You Begin).

- Create a Container:

  - CPU only: Download and run run_cpu.sh
  - GPU: Download and run run_gpu_nvidia.sh

```bash
wget <script_url>
chmod +x run_*.sh   # files downloaded with wget are not executable
./run_cpu.sh        # or ./run_gpu_nvidia.sh on a machine with an NVIDIA GPU
```

The container is named ARM_03 by default.

- Allow the container to display GUI applications (in a different terminal, on the host machine):

```bash
xhost +local:root
```

- Build the Workspace:

```bash
cd /arm_ws
source /opt/ros/humble/setup.bash
colcon build --symlink-install
source install/setup.bash
```

NOTE: You can attach a new terminal to the container using the following command:

```bash
docker exec -it ARM_03 bash
```

IMPORTANT:

Make sure to source the built environment and set the robot model in every terminal inside the container:

```bash
source /arm_ws/install/setup.bash; export TURTLEBOT3_MODEL=burger
```

Set the environment variable ROS_DOMAIN_ID in the container as well, as described in Multi-Computer Setup.

Building the World Map

- Set the Turtlebot3 Model:

```bash
export TURTLEBOT3_MODEL=burger
```

Available options are: burger, waffle and waffle_pi.

- Launch Gazebo Simulation:

ros2 launch turtlebot3_gazebo turtlebot3_world.launch.py- Run Cartographer SLAM (new terminal):

export TURTLEBOT3_MODEL=burger

source install/setup.bash

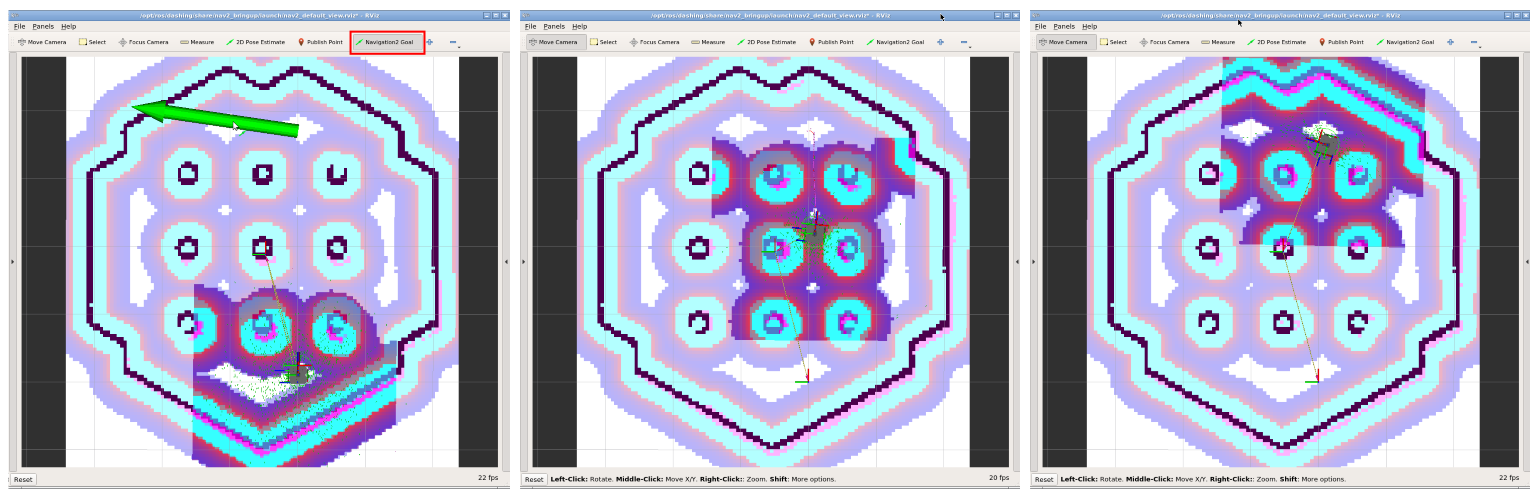

ros2 launch turtlebot3_cartographer cartographer.launch.py use_sim_time:=TrueRViz will show the map-building process.

- Move the Robot with the teleop node for keyboard operation (new terminal):

```bash
ros2 run turtlebot3_teleop teleop_keyboard
```

Then drive the robot with the keys w, a, s, d and x (w/x increase/decrease linear velocity, a/d increase/decrease angular velocity, s stops the robot) until the entire “world” map is built.

- Save the Map:

```bash
cd /arm_ws
mkdir -p maps
ros2 run nav2_map_server map_saver_cli -f /arm_ws/maps/turtlebot3_world_map
```

This creates two files representing the map: turtlebot3_world_map.yaml (map metadata) and turtlebot3_world_map.pgm (the map image).

- Turn off the Simulation with Ctrl+C in all terminals.
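The .yaml file holds the metadata needed to interpret the .pgm image. The sketch below, assuming trinary mode and the example values shown (not necessarily those in your saved file), converts a pixel value to an occupancy value (100 occupied, 0 free, -1 unknown) using the thresholds, and a pixel index to map-frame coordinates using the resolution and origin. It follows the map-file convention documented for nav2_map_server but is an illustration, not its code.

```python
from typing import Any, Dict, Tuple

# Example contents of a map .yaml file (hypothetical values; open your own
# /arm_ws/maps/turtlebot3_world_map.yaml to see the real ones).
MAP_META: Dict[str, Any] = {
    "resolution": 0.05,            # metres per pixel
    "origin": (-2.0, -2.5, 0.0),   # pose (x, y, yaw) of the lower-left pixel
    "negate": 0,
    "occupied_thresh": 0.65,
    "free_thresh": 0.196,
}


def pixel_to_occupancy(value: int, meta: Dict[str, Any] = MAP_META) -> int:
    """Trinary interpretation of an 8-bit pixel: 100 occupied, 0 free, -1 unknown."""
    # With negate = 0, dark pixels mean "likely occupied".
    p = value / 255.0 if meta["negate"] else (255 - value) / 255.0
    if p > meta["occupied_thresh"]:
        return 100
    if p < meta["free_thresh"]:
        return 0
    return -1


def pixel_to_world(row: int, col: int, height: int,
                   meta: Dict[str, Any] = MAP_META) -> Tuple[float, float]:
    """Map-frame coordinates of a pixel centre; image row 0 is the TOP row.

    Assumes the origin yaw is 0 (no rotation).
    """
    res = meta["resolution"]
    ox, oy, _ = meta["origin"]
    return ox + (col + 0.5) * res, oy + (height - 1 - row + 0.5) * res


if __name__ == "__main__":
    print(pixel_to_occupancy(0), pixel_to_occupancy(254), pixel_to_occupancy(205))  # 100 0 -1
```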

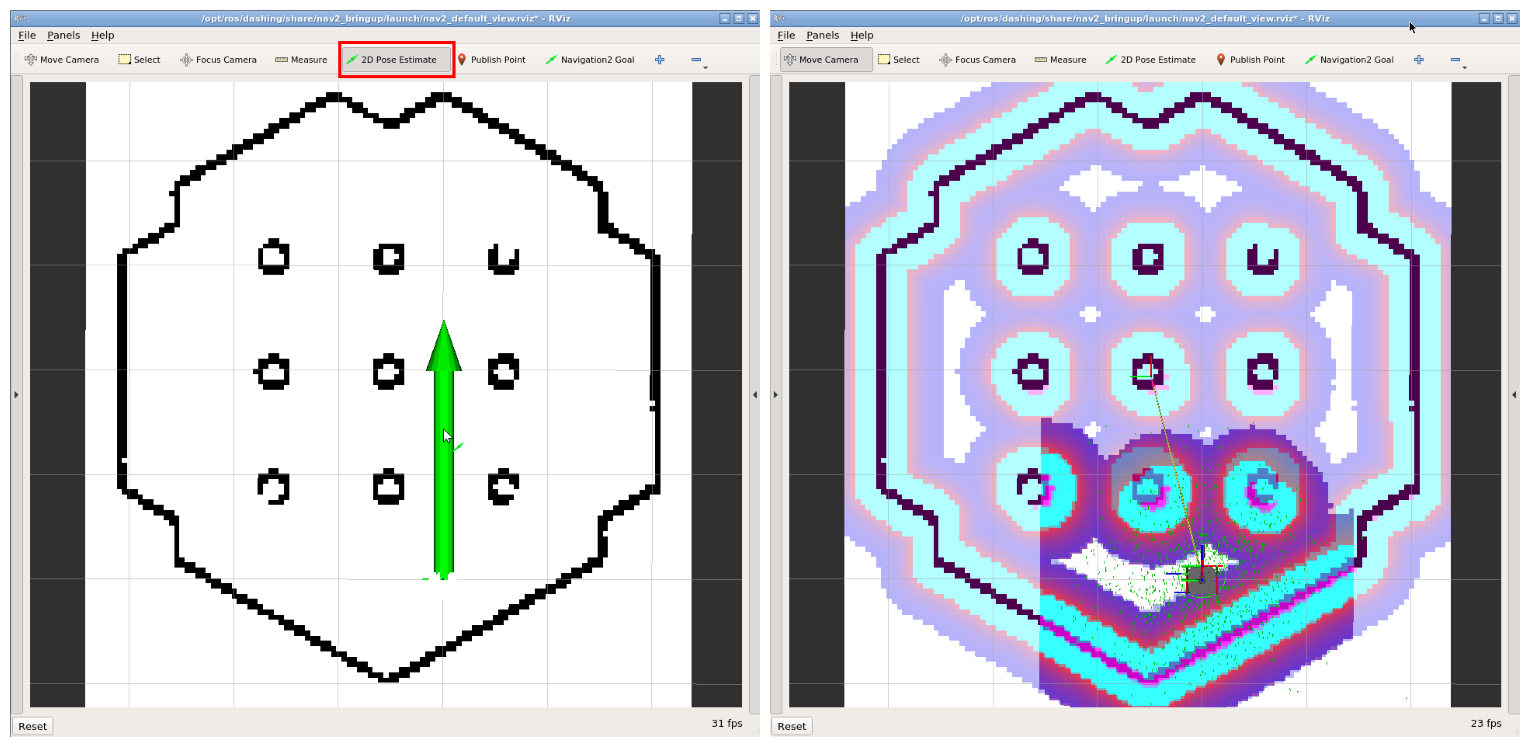

Navigating using the map

- Launch Gazebo Simulation:

```bash
export TURTLEBOT3_MODEL=burger
ros2 launch turtlebot3_gazebo turtlebot3_world.launch.py
```

- Launch Navigation2 with the AMCL method (new terminal):

```bash
ros2 launch turtlebot3_navigation2 navigation2.launch.py use_sim_time:=True map:=/arm_ws/maps/turtlebot3_world_map.yaml
```

Navigation2 (Nav2) is the ROS2 navigation stack; besides AMCL localization, it provides path planning and obstacle avoidance.

- Set Initial Pose in RViz:

  - Click 2D Pose Estimate in the RViz window.
  - Click on the map where you think the robot is located and drag in the direction it is facing.
  - Repeat until the particle cloud aligns with the robot’s actual location.

- Set Navigation Goal:

  - Click Navigation Goal in the RViz window.
  - Click and drag to set a destination. The robot will plan and follow a path.

Play with Parameters

In the turtlebot3_navigation2 package, in the param folder (/arm_ws/src/turtlebot3/turtlebot3_navigation2/param), there is a file burger.yaml. Verify how modifying the following parameters affects the operation of the AMCL module:

- beam_skip_distance when do_beamskip is set to True
- laser_max_range and laser_min_range
- max_beams
- max_particles
- resample_interval
- update_min_a and update_min_d
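When experimenting, it may help to have a mental model of what some of these parameters gate. The sketch below is a simplified model written from the parameter descriptions in the Nav2 AMCL configuration guide, not from nav2_amcl's source: update_min_d and update_min_a decide whether the filter is updated at all after the robot moves, resample_interval sets how many updates pass between resamplings, and max_beams, laser_min_range and laser_max_range select which beams of a scan are fed to the sensor model. The numbers in the usage lines are arbitrary.

```python
import math
from typing import List, Sequence


def should_update(dx: float, dy: float, dyaw: float,
                  update_min_d: float, update_min_a: float) -> bool:
    """Run a filter update only after enough motion since the previous update."""
    return math.hypot(dx, dy) >= update_min_d or abs(dyaw) >= update_min_a


def should_resample(update_count: int, resample_interval: int) -> bool:
    """Resample on every resample_interval-th filter update."""
    return update_count % resample_interval == 0


def select_beams(ranges: Sequence[float], max_beams: int,
                 laser_min_range: float, laser_max_range: float) -> List[int]:
    """Indices of up to max_beams evenly spaced beams with a usable range reading."""
    n = len(ranges)
    if n == 0 or max_beams <= 0:
        return []
    if max_beams >= n:
        candidates = list(range(n))
    elif max_beams == 1:
        candidates = [0]
    else:
        step = (n - 1) / (max_beams - 1)
        candidates = [round(k * step) for k in range(max_beams)]
    # Beams without a finite, in-range reading are skipped, not replaced.
    return [i for i in candidates
            if math.isfinite(ranges[i]) and laser_min_range <= ranges[i] <= laser_max_range]


if __name__ == "__main__":
    scan = [1.0] * 360
    scan[91] = float("inf")  # one beam without a return
    print(len(select_beams(scan, 60, 0.12, 3.5)))
    print(should_update(0.1, 0.0, 0.05, update_min_d=0.25, update_min_a=0.2))
```

Raising update_min_d or update_min_a in this model means fewer, larger corrections; lowering max_beams means each correction uses less of the scan.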

Part 2: 3D Point Cloud SLAM

Now, you’ll use lidarslam to build a 3D map from LIDAR data and analyze its performance.

lidarslam: A ROS2 package for 3D SLAM, creating maps and trajectories from point clouds. It uses scan matching (NDT by default) to calculate the relative transformation between consecutive LIDAR scans, which gives an initial estimate of the motion. It then refines these initial pose estimates and keeps the map consistent over the long term through graph-based pose optimization, which includes a loop closure mechanism.
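Scan matching only estimates the motion between consecutive scans; the trajectory follows from composing these relative transformations one after another. A minimal planar (SE(2)) sketch, assuming poses as (x, y, yaw) tuples (lidarslam itself estimates full 3D poses), shows why a small error in every scan-to-scan estimate adds up to drift that graph-based optimization and loop closure later have to remove.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw) in the map frame


def compose(a: Pose, b: Pose) -> Pose:
    """Apply relative motion b (expressed in the frame of pose a) to pose a."""
    ax, ay, ath = a
    bx, by, bth = b
    return (ax + bx * math.cos(ath) - by * math.sin(ath),
            ay + bx * math.sin(ath) + by * math.cos(ath),
            math.atan2(math.sin(ath + bth), math.cos(ath + bth)))  # wrap to (-pi, pi]


def chain(relative_motions: List[Pose], start: Pose = (0.0, 0.0, 0.0)) -> List[Pose]:
    """Accumulate scan-to-scan estimates into a trajectory (pure dead reckoning)."""
    poses = [start]
    for rel in relative_motions:
        poses.append(compose(poses[-1], rel))
    return poses


if __name__ == "__main__":
    square = [(1.0, 0.0, math.pi / 2)] * 4          # ideal 1 m x 1 m loop
    biased = [(1.0, 0.0, math.pi / 2 + 0.02)] * 4   # 0.02 rad yaw error per scan
    print(chain(square)[-1])   # back at (approximately) the start
    print(chain(biased)[-1])   # the loop no longer closes: accumulated drift
```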

Environment Preparation

- Load the Docker Image: Use arm/lab06 (see Before You Begin).

- Create a Container:

  - CPU only: Download and run run_cpu.sh
  - GPU: Download and run run_gpu_nvidia.sh

```bash
wget <script_url>
chmod +x run_*.sh   # files downloaded with wget are not executable
./run_cpu.sh        # or ./run_gpu_nvidia.sh on a machine with an NVIDIA GPU
```

The container is named ARM_06 by default.

- Build the Workspace:

```bash
cd /arm_ws
source /opt/ros/humble/setup.bash
colcon build --symlink-install
source install/setup.bash
```

NOTE: You can attach a new terminal to the container using the following command:

```bash
docker exec -it ARM_06 bash
```

Running lidarslam

```bash
cd /arm_ws
source install/setup.bash
ros2 launch lidarslam lidarslam.launch.py
```

An RViz window should appear, visualizing the localization and map-building process.

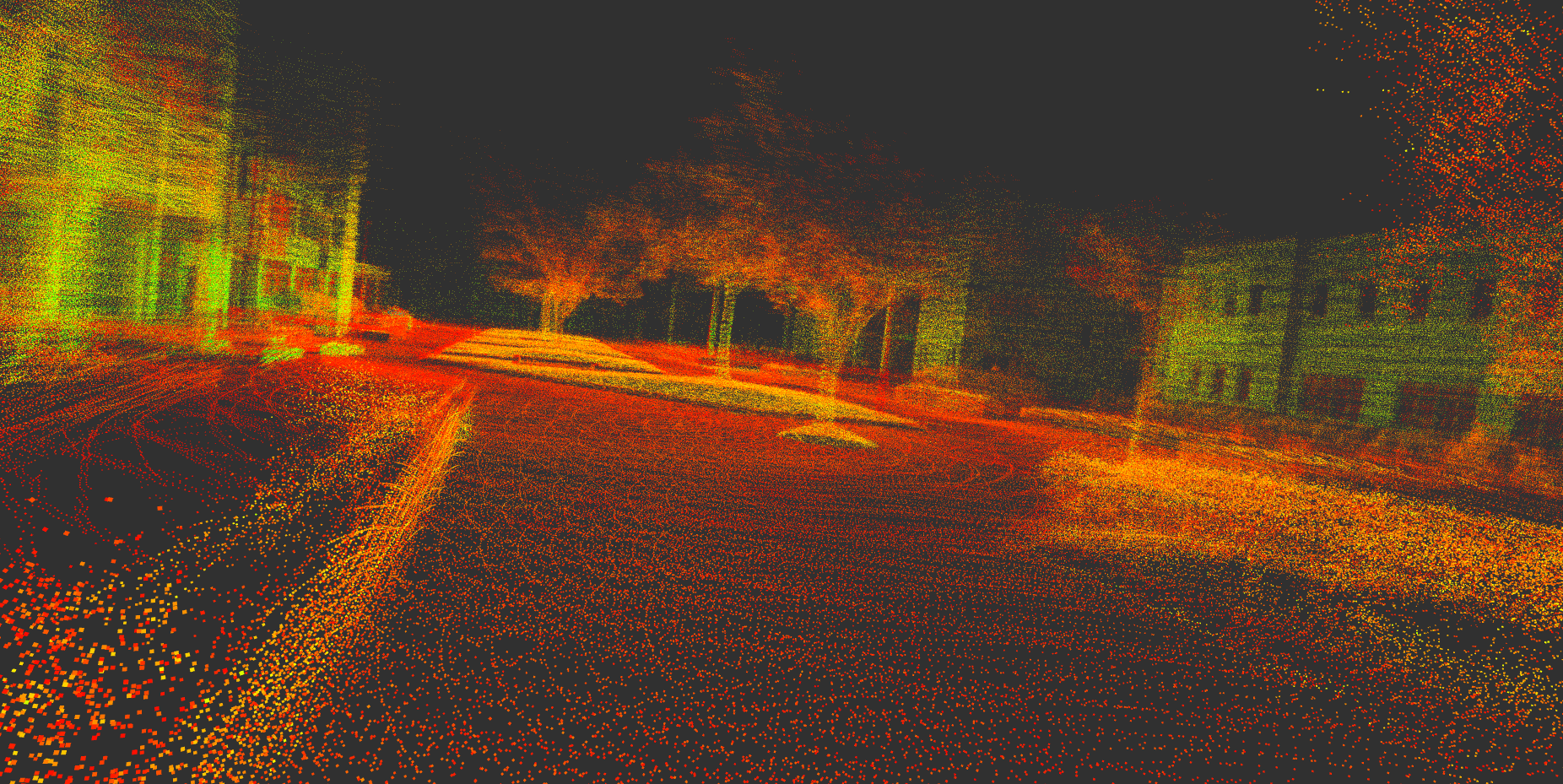

HDL_400

Play back data recorded with a Velodyne VLP-32 LIDAR sensor.

- Play the bag file:

```bash
ros2 bag play -p -r 0.5 bags/hdl_400
```

The replay starts paused and runs at 0.5 of the normal speed.

- In RViz, add a PointCloud2 display for the /velodyne_points topic. It contains the “current” readings from the LIDAR.
- Unpause the bag replay by pressing the space key in the terminal where it is running.

Observe the difference between the maps from the /map topic (raw map) and the /modified_map topic (optimized map). Similarly, observe the difference between the /path and /modified_path topics. Unfortunately, there is no ground truth localization for this data, but you can see the map optimization process based on the loop closure mechanism.

Loop closure is a technique in SLAM where the system recognizes when the robot has returned to a previously visited location. When a loop closure is detected, the system can correct accumulated drift errors by adjusting the entire trajectory and map. This results in a more accurate and consistent map, especially for long trajectories where odometry errors would otherwise accumulate.
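lidarslam corrects the trajectory by optimizing a pose graph that contains both the scan-to-scan and the loop closure constraints. As a deliberately crude stand-in that only conveys the idea, the sketch below spreads the error revealed by a loop closure linearly over a 2D path, assuming translation-only error and equal trust in every step; it is not the optimization lidarslam performs.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def distribute_loop_error(path: Sequence[Point], revisited: Point) -> List[Point]:
    """Spread the loop-closure error linearly along a drifted 2D path.

    The last pose should coincide with the revisited place but does not because
    of drift. Pose i is shifted by i/(N-1) of the total correction, so the first
    pose stays fixed and the last one lands exactly on the revisited place.
    """
    n = len(path)
    if n < 2:
        return list(path)
    ex = revisited[0] - path[-1][0]
    ey = revisited[1] - path[-1][1]
    return [(x + ex * i / (n - 1), y + ey * i / (n - 1)) for i, (x, y) in enumerate(path)]


if __name__ == "__main__":
    # A square loop whose end should coincide with the start, but has drifted.
    drifted = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.2, 0.1)]
    print(distribute_loop_error(drifted, (0.0, 0.0)))
```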

KITTI 00

A bag file with the first 200 scans of sequence 00 of the KITTI dataset was prepared. It also contains ground truth localization, which can be used to assess the system’s performance.

- Restart lidarslam:

```bash
ros2 launch lidarslam lidarslam.launch.py
```

- Play the bag file:

```bash
ros2 bag play -p bags/kitti
```

- In RViz, add a Path display for the /path_gt_lidar topic. Change its color so it can be distinguished from the other paths (yellow and green).
- Unpause the bag replay by pressing the space key in the terminal where it is running.

Observe the difference between the ground truth line and the path returned by SLAM. Repeat the experiment with -r 0.3 (playback at 0.3 of the normal speed). What happens this time?
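To go beyond a visual comparison, a common metric is the absolute trajectory error (ATE): the root-mean-square of position differences between time-matched estimated and ground-truth poses. A minimal sketch, assuming you have already extracted timestamps and (x, y, z) positions from /path_gt_lidar and from the SLAM path (for example from a recorded bag), and that both are expressed in the same frame, so no trajectory alignment step is performed:

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def associate(est_stamps: Sequence[float], gt_stamps: Sequence[float],
              max_dt: float = 0.05) -> List[Tuple[int, int]]:
    """Pair each estimate with the nearest ground-truth stamp within max_dt seconds."""
    pairs: List[Tuple[int, int]] = []
    if not gt_stamps:
        return pairs
    for i, t in enumerate(est_stamps):
        j = min(range(len(gt_stamps)), key=lambda k: abs(gt_stamps[k] - t))
        if abs(gt_stamps[j] - t) <= max_dt:
            pairs.append((i, j))
    return pairs


def ate_rmse(estimated: Sequence[Point3], ground_truth: Sequence[Point3],
             pairs: Sequence[Tuple[int, int]]) -> float:
    """Root-mean-square position error over the associated pose pairs."""
    if not pairs:
        raise ValueError("no associated poses")
    sq = [sum((a - b) ** 2 for a, b in zip(estimated[i], ground_truth[j])) for i, j in pairs]
    return math.sqrt(sum(sq) / len(sq))


if __name__ == "__main__":
    est_t, est = [0.0, 0.1, 0.2], [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0), (2.0, 0.3, 0.0)]
    gt_t, gt = [0.0, 0.1, 0.2], [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    print(f"ATE RMSE = {ate_rmse(est, gt, associate(est_t, gt_t)):.3f} m")
```

Computing this value for the runs at different playback rates gives a number to compare instead of an impression.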

Play with the SLAM parameters

Using the lidarslam documentation and source code, and by observing the system’s behavior, verify the impact of the following parameters from the /arm_ws/src/lidarslam_ros2/lidarslam/param/lidarslam.yaml file:

- ndt_resolution
- trans_for_mapupdate
- map_publish_period
- scan_period
- voxel_leaf_size
- loop_detection_period
- threshold_loop_closure_score
- distance_loop_closure
- range_of_searching_loop_closure
- search_submap_num
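Some of these parameters have simple geometric meanings that are easy to prototype outside ROS. The sketch below assumes plain (x, y, z) tuples. voxel_downsample keeps one centroid per occupied cubic voxel of side leaf, which is the idea behind a voxel-grid filter such as PCL's VoxelGrid and hence behind a parameter like voxel_leaf_size. loop_candidates is a hypothetical candidate search: it keeps earlier poses that lie within a search radius of the current pose yet were visited long ago along the path. It only loosely mirrors how range_of_searching_loop_closure and distance_loop_closure might be used; it is not lidarslam's code, so check the actual semantics in the lidarslam documentation and source as the exercise asks.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point3], leaf: float) -> List[Point3]:
    """Replace all points that fall into the same cubic voxel by their centroid."""
    cells: Dict[Tuple[int, ...], List[Point3]] = defaultdict(list)
    for p in points:
        cells[tuple(math.floor(c / leaf) for c in p)].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in cells.values()]


def loop_candidates(positions: Sequence[Point3], search_radius: float,
                    min_travel: float) -> List[int]:
    """Earlier poses close to the latest one in space but far from it along the path.

    search_radius and min_travel are hypothetical knobs, loosely analogous to
    range_of_searching_loop_closure and distance_loop_closure.
    """
    if len(positions) < 2:
        return []
    current = positions[-1]
    # travelled[i]: path length from pose i to the latest pose
    travelled = [0.0] * len(positions)
    for i in range(len(positions) - 2, -1, -1):
        travelled[i] = travelled[i + 1] + math.dist(positions[i], positions[i + 1])
    return [i for i in range(len(positions) - 1)
            if math.dist(positions[i], current) <= search_radius
            and travelled[i] >= min_travel]
```

A larger leaf gives fewer points (faster matching, less detail); in the candidate search, a larger radius or a smaller minimum travel yields more candidates and therefore more registration attempts, including false ones.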